Xenophobic Political Theater Undermines Security and Innovation

Recent legislation proposing to “decouple” American AI from China presents itself as a national security measure. However, it follows a disturbing pattern of technological nationalism that has repeatedly harmed both innovation and human dignity throughout American history.

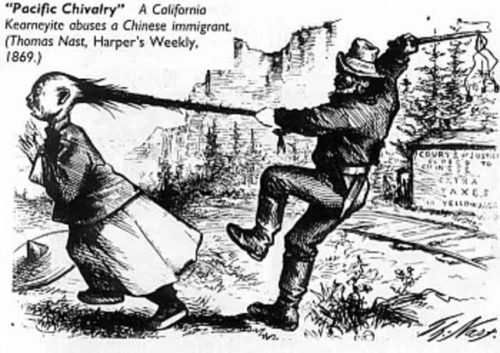

The rhetoric surrounding this AI legislation eerily mirrors the Chinese Exclusion Act of 1882, the first major law restricting immigration based on nationality.

Both share a fundamental contradiction: wanting Chinese labor and expertise while simultaneously seeking to exclude Chinese people and contributions.

Stanford University’s own history exemplifies this contradiction. Chinese laborers were instrumental in building the transcontinental railroad that created the fortune of Leland Stanford. These workers endured brutal conditions, earned lower wages than their white counterparts, and were assigned the most dangerous work – leading to the horrific practice of measuring tunnel-blasting time as a “Chinaman minute,” a calculation of how many Chinese workers would be killed per unit of progress.

Yet after the railroads were built, these same workers were blocked from citizenship, property ownership, and economic advancement through systematic legal discrimination. Stanford University itself, built with wealth from Chinese labor, would later enforce strict quotas on Chinese students.

Notably, the proposed AI bill suggests 20 years in prison for anyone found using a computer that has evidence of “models” from China. According to its language, people who download AI models from China could face up to 20 years in prison, a million-dollar fine, or both.

Imagine the kind of politician who dreams of a future where simply dropping a model on someone’s computer gets them swept off to jail for decades.

Wanting Chinese contributions while seeking to criminalize and exclude Chinese influence is not only contradictory; in modern AI development it is technically impossible. The reality of artificial intelligence creation defies such artificial boundaries. Modern AI stands on the shoulders of Chinese-American innovators, perhaps most notably in the case of ImageNet. This revolutionary dataset, which transformed computer vision and helped launch the deep learning revolution, was created by Fei-Fei Li, who immigrated from China to the US and led the project at Stanford. The story of ImageNet exemplifies how arbitrary and harmful national divisions in technology can be.

The technical integration goes even deeper when we examine a typical large language model. Its attention mechanism derives from Google’s transformer architecture, developed in the US, while incorporating optimization techniques pioneered at Tsinghua University. The model runs on GPUs designed in America but manufactured in Taiwan, implementing deep learning principles advanced through international collaboration between researchers like Yann LeCun in the US and Jian Sun at Microsoft Research Asia. The training data necessarily incorporates Chinese web content and academic research, while the underlying software libraries include crucial contributions from developers worldwide, including significant optimizations from teams at Alibaba and Baidu.

The recent panic over DeepSeek perfectly illustrates this contradiction. While lawmakers rush to ban a Chinese AI model from American devices, they ignore that any open model can be fully air-gapped and run offline without any connection to its origin servers. Meanwhile, Zoom – which handles everything from classified research discussions to defense contractor meetings on university campuses – frequently processes data through Chinese code and infrastructure with minimal or no security review.

Or consider Tesla, whose Nazi-promoting eugenicist South African CEO openly praises China as his favorite government with the largest market and manufacturing base while simultaneously acquiring unprecedented access to U.S. government contracts and infrastructure projects. There couldn’t be a more obvious immediate national security threat than Elon Musk.

This inconsistent approach – where some Chinese technology connections trigger alarm while others are ignored – reveals how these policies are more about political theater than actual security. Real security would focus on technical capabilities and specific vulnerabilities, not simplistic national origins.

Modern AI development relies on an intricate web of globally distributed computing infrastructure. The hardware supply chains span continents, while the open-source software that powers these systems represents the collective effort of developers across the world. Research breakthroughs emerge from international collaborations, building on shared knowledge that flows across borders as freely as the data these systems require.

Let’s be clear: espionage threats are real, and data security across borders is a legitimate concern. However, the fundamental weakness of nationalist approaches to security is their reliance on binary, overly simplistic classifications. Sorting AI into “Chinese” versus “American” creates exactly the kind of rigid, brittle, reactive security model that sophisticated attackers exploit most easily, making everything less safe.

Robust security systems thrive on nuance and depth. They employ multiple layers of validation, contextual analysis, and sophisticated inference to detect and prevent threats. A security model that sorts researchers, code, or data into simple national categories without thought is like France believing that defense meant an expensive concrete Maginot Line, without trucks or planes. Without adaptive, intelligent internal security, a single breach compromises everything. Real security requires technical solutions that can handle nuance, detect subtle patterns, and proactively adapt to emerging threats.
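To make “layers of validation” concrete, here is a minimal sketch of what an origin-agnostic check on a downloaded model file might look like. It is an illustration under stated assumptions, not a complete security control: the function names, the `SAFE_SUFFIXES` allow-list, and the idea of a “pinned” digest published by whoever reviewed the file are all hypothetical choices made for this example. The point is that neither check asks where the file came from; each asks what the file is and whether it is the one that was reviewed.

```python
import hashlib
from pathlib import Path

# Hypothetical allow-list for this sketch: weight formats intended to hold
# data only. Pickle-based files are excluded because unpickling can execute
# arbitrary code, whoever produced the file.
SAFE_SUFFIXES = {".safetensors"}


def sha256_of(path: Path) -> str:
    """Hash the file in 1 MiB chunks so large model files fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def check_artifact(path: Path, pinned_sha256: str) -> list[str]:
    """Return the names of failed checks; an empty list means all passed.

    `pinned_sha256` is assumed to be a digest recorded when the file was
    reviewed. Note that no check looks at the file's country of origin.
    """
    failures = []
    # Layer 1: refuse formats outside the allow-list.
    if path.suffix.lower() not in SAFE_SUFFIXES:
        failures.append("format")
    # Layer 2: confirm the bytes match the reviewed artifact exactly.
    if sha256_of(path) != pinned_sha256.lower():
        failures.append("integrity")
    return failures
```

A real deployment would add further layers, such as sandboxed execution and network isolation, but even this small sketch treats a tampered domestic file and a tampered foreign file identically, which a nationality filter cannot do.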

The defense of Ukraine against Russian invasion demonstrates this principle perfectly. When Russian forces attempted to seize Hostomel Airport near Kyiv, Ukraine’s defense succeeded not through static border fortifications, but through rapid threat identification and adaptive response. While main forces were positioned at the borders, regular troops at Hostomel identified and engaged helicopter-borne attackers immediately, holding out for two crucial hours until reinforcement brigades arrived. This adaptive defense against an unexpected vector saved Kyiv from potential capture. Similarly, effective AI security requires moving beyond simplistic border-based restrictions to develop dynamic, responsive security frameworks that can identify and counter threats regardless of their origin point.

Consider how actual security breaches typically occur: not through broad categories of static nationality, but through specific vulnerabilities, social engineering, and careful exploitation of trust boundaries. Effective security measures therefore need to focus on technical rigor, behavioral analysis, and sophisticated validation frameworks – approaches that become harder, not easier, when we artificially restrict collaboration and create simplistic trust boundaries based on nationality.

Just as the Chinese Exclusion Act failed to address real economic challenges while causing immense human suffering, this attempt at technological segregation would fail to address real security concerns while hampering innovation and perpetuating harmful nationalist narratives.

The future of AI development lies not in futile attempts at nationalistic segregation and incarceration, but in thoughtful collaboration guided by strong technical standards and security frameworks. The global nature of AI development isn’t a vulnerability to be feared; it’s a strength to be led through technical excellence and rigorous security practices that focus on capabilities rather than simplistic national origins.

As we stand at the dawn of the AI era, we face the same choice that has confronted American innovation throughout history: we can repeat the mistakes of xenophobic restrictions that ultimately harmed both American security and human dignity, or we can embrace the inherently open nature of technological progress while building the technical frameworks needed to ensure its responsible development.

Senator Hawley, the bill’s sponsor, has repeatedly demonstrated concerning behavior, whether it’s fundraising off January 6th certification objections while the Capitol was under siege, or facing ethics complaints about misusing state resources as Missouri AG.

His rhetoric targeting Asian Americans and Chinese students, alongside his theatrical performance during Judge Jackson’s confirmation hearings, suggests a pattern of leveraging racial grievance for political gain.

His purported concerns about foreign influence ring hollow given his willingness to put personal ambition ahead of ethical governance.