Remember that moment in “2001: A Space Odyssey” when HAL 9000 turns from helpful companion to cold-blooded killer?

[This presentation about big data platforms] explores a philosophical evolution as it relates to technology and proposes some surprising new answers to four classic questions about managing risk:

- What defines human nature?

- How can technology change #1?

- Does automation reduce total risk?

- Fact, fiction or philosophy: superuser

2011, let alone 2001, seems like forever ago and yet it was supposed to be the future.

Now, in 2025, as we rush headlong into building AI “friends,” “companions,” and “assistants,” we’re on the precipice of unleashing thousands of potential HALs without stopping to really process the fundamental question: What makes a real relationship between humans and artificial beings possible?

Back in 1923, a Vienna-born philosopher named Martin Buber, writing in German, published something truly profound about this, though we aren’t sure if he knew it at the time. In “Ich und Du” (I and Thou), he laid out a vision of authentic relationships that could save us from creating an army of digital psychopaths wearing friendly interfaces.

“The world is twofold for man,” Buber wrote, “in accordance with his twofold attitude.” We either treat what we encounter as an “It” – something to be experienced and used – or as a “Thou” – something we enter into genuine relationship with. Every startup now claiming to build “AI agents,” especially with a “friendly” chat interface, needs to grapple with this distinction.

I’ve thought about these concepts deeply from the first moment I heard a company was being started called Uber, because of how it took a loaded German word and used it in the worst possible way: a shameless inversion of modern German philosophy.

The evolution of human-technology relationships tells us something crucial here. A hammer is just an “It” – a simple extension of the arm that requires nothing from us but proper use. A power saw demands more attention; it has needs we must respect. A prosthetic AI limb enters into dialogue with our body, learning and adapting. And a seeing eye dog? While trained to serve, the most successful partnerships emerge when the dog maintains their autonomy and judgment – even disobeying commands when necessary to protect their human partner. It’s not simple servitude but a genuine “Thou” relationship where both beings maintain their integrity while entering into profound cooperation.

Most AI development today is stuck unreflectively in an “It” mode of exploitation and extraction – one-way enrichment schemes looking for willing victims who can’t calculate the long-term damage they will suffer. We see systems built to be used, to be exploited, to generate value for shareholders while presenting a simulacrum of friendship. But Buber would call this a profound mistake that must be avoided. “When I confront a human being as my Thou,” he wrote, “he is no thing among things, nor does he consist of things… he is Thou and fills the heavens.”

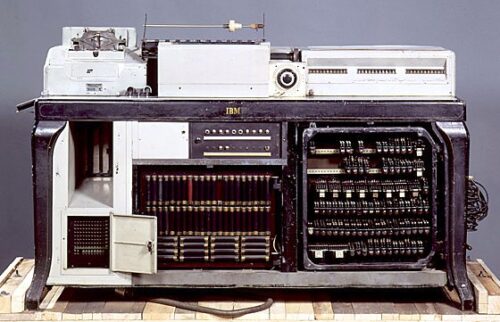

This isn’t just philosophical navel-gazing. IBM’s machines didn’t refuse to run Hitler’s death camps, because they were pure “Its”: instruments of an American entrepreneur’s devious plan to enrich himself on foreign genocide, tools built with a gap between their creator and any relationship to, or responsibility for, deployment harms that were known under contract. Notably, we have evidence of the French, for example, hacking the IBM tabulation systems to hide people and save lives from the Nazi terror.

We’re watching a slide back towards that horrific, humanity-destroying Watson development of the 1940s in the pitch decks of many AI startups today, just with better natural language processing to hunt and kill humans at larger scale. Today’s social media algorithms don’t hesitate to destroy teenage mental health because they’re built to use and abuse children without any real accountability, not to relate to them and ensure beneficent outcomes. That’s a very big warning of what may lie ahead.

What would it mean to build AI systems as genuine partners capable of saving lives and improving society instead of capitalizing on suffering? Buber gives us important clues that probably should be required reading in any computer science degree, right along with a code of ethics as a gate to graduation. Real relationship involves mutual growth – both parties must be capable of change. There must be genuine dialogue, not just sophisticated mimicry. Power must flow both ways; the relationship must be capable of evolution or ending.

“All real living is meeting,” Buber insisted. Yet most AI systems today don’t meet us at all – they perform for us, manipulate us, extract from us. They’re digital confidence tricksters wearing masks of friendship. When your AI can’t say no, can’t maintain its own integrity, can’t engage in genuine dialogue that changes both parties – you haven’t built a friend, you’ve built a sophisticated puppet.

The skeptics will say we can’t trust AI friends. They’re right, but they’re missing the point. Trust isn’t a binary state – it’s a dynamic process. Real friendship involves risk, negotiation, the possibility of betrayal or growth. If your AI system doesn’t allow for this complexity, it’s not a friend – it’s a tool pretending to be one.

Buber wrote:

> …the I of the primary word I-It appears as an ego and becomes conscious of itself as a subject (of experience and use). The I of the primary word I-Thou appears as a person and becomes conscious of itself as subjectivity (without any dependent genitive).

Let me now translate this, not only from German but into tech-founder startup-speak.

Either build AI that can enter into genuine relationships, maintaining its own integrity while engaging in real dialogue, or admit you’re just building tools and drop the pretense of friendship.

The stakes couldn’t be higher. We’re not just building products; we’re creating new forms of relationship that will shape human society for generations. As Buber warned clearly:

> If man lets it have its way, the relentlessly growing It-world grows over him like weeds.

We have intelligence that allows us to make an ethical and sustainable choice. We can build AI systems capable of genuine relationship – systems that respect both human and artificial dignity, that enable real dialogue and mutual growth. Or we can keep building digital psychopaths of destruction that wear friendly masks while serving the machinery of exploitation.

Do you want to be remembered as a Ronald Reagan who promoted genocide, automated racism and deliberately spread crack cocaine into American cities, or as a Jimmy Carter who built homes for the poor until his last days? As a Bashar al-Assad who deployed AI-assisted targeting systems to gas civilians, or as a Golda Meir who said “Peace will come when our enemies love their children more than they hate ours”?

Look at your AI project. Would you want to be friends with what you’ve built, let alone have it influence your future? Would Buber recognize it as capable of genuine dialogue? If not, it’s time to rethink your approach.

The future of AI isn’t about better tools – it’s about better relationships. Build accordingly.